#sql merge command

Text

Top 10 ChatGPT Prompts For Software Developers

ChatGPT can do a lot more than just code creation, and this blog post is all about that. We have curated a list of ChatGPT prompts that will help software developers with their everyday tasks. ChatGPT can answer questions and write code, which makes it a very helpful tool for software engineers.

While this AI tool can help developers with the entire SDLC (Software Development Lifecycle), it is important to understand how to use the prompts effectively for different needs.

Prompt engineering is what makes the results accurate. ChatGPT answers whatever prompt it is given, so the precision of its answers depends heavily on how the prompt is formulated.

To Get The Best Out Of ChatGPT, Your Prompts Should Be:

Clear and well-defined. The more detailed your prompts, the better suggestions you will receive from ChatGPT.

Specific about the functionality and programming language. If you do not state exactly what you need, you may not get the desired results.

Phrased in natural language, as if asking someone for help. This helps ChatGPT understand your problem better and give more relevant outputs.

Free of unnecessary information and ambiguity. Keep the prompt to the point while still including all the important details.

Top ChatGPT Prompts For Software Developers

Let’s quickly have a look at some of the best ChatGPT prompts to assist you with various stages of your Software development lifecycle.

1. For Practicing SQL Commands.

2. For Becoming A Programming Language Interpreter.

3. For Creating Regular Expressions Since They Help In Managing, Locating, And Matching Text.
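As a concrete case of matching text, a prompt such as "write a regular expression that finds dates in YYYY-MM-DD form" might yield something like the following Python sketch. The pattern and the helper name `find_iso_dates` are illustrative assumptions, not part of the original list. The pattern checks only the shape of a date, not whether that day exists in the calendar.

```python
import re

# Four-digit year, month 01-12, day 01-31; word boundaries avoid partial matches.
ISO_DATE = re.compile(r"\b\d{4}-(?:0[1-9]|1[0-2])-(?:0[1-9]|[12]\d|3[01])\b")


def find_iso_dates(text: str) -> list[str]:
    """Return every substring of `text` shaped like YYYY-MM-DD."""
    return ISO_DATE.findall(text)
```

Asking ChatGPT to explain each part of a pattern like this is usually a good follow-up prompt, since regular expressions are easy to get subtly wrong.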

4. For Generating Architectural Diagrams For Your Software Requirements.

Prompt Examples: I want you to act as a Graphviz DOT generator, an expert to create meaningful diagrams. The diagram should have at least n nodes (I specify n in my input by writing [n], 10 being the default value) and to be an accurate and complex representation of the given input. Each node is indexed by a number to reduce the size of the output, should not include any styling, and with layout=neato, overlap=false, node [shape=rectangle] as parameters. The code should be valid, bugless and returned on a single line, without any explanation. Provide a clear and organized diagram, the relationships between the nodes have to make sense for an expert of that input. My first diagram is: “The water cycle [8]”.
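To make the requested output shape easier to picture, here is a minimal Python sketch that builds a single-line DOT graph with the parameters named in the prompt. The helper `to_dot` and the three-node water-cycle graph are hypothetical, chosen only for illustration. A real answer from ChatGPT would contain more nodes.

```python
def to_dot(labels: list[str], edges: list[tuple[int, int]]) -> str:
    """Build a single-line Graphviz DOT graph with numbered nodes."""
    parts = ["layout=neato", "overlap=false", "node [shape=rectangle]"]
    # Nodes are indexed by number, as the prompt requests, to keep the output short.
    parts += [f'{i} [label="{label}"]' for i, label in enumerate(labels)]
    parts += [f"{src} -> {dst}" for src, dst in edges]
    return "digraph G { " + "; ".join(parts) + "; }"


# Hypothetical, heavily simplified water cycle.
labels = ["Evaporation", "Condensation", "Precipitation"]
dot = to_dot(labels, [(0, 1), (1, 2), (2, 0)])
```

The resulting string can be pasted into any Graphviz renderer to check that the diagram ChatGPT returns is valid.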

5. For Solving Git Problems And Getting Guidance On Overcoming Them.

Prompt Examples: “Explain how to resolve this Git merge conflict: [conflict details].”

6. For Code Generation: ChatGPT Can Generate Code From The Descriptions And Requirements You Give It.

Prompt Examples: -Write a program/function to {explain functionality} in {programming language} -Create a code snippet for checking if a file exists in Python. -Create a function that merges two lists into a dictionary in JavaScript.
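For a sense of what such prompts tend to return, here is a short Python sketch covering two of the requests above. The function names are illustrative assumptions, and the list-merging helper is written in Python rather than JavaScript so that both examples share one language.

```python
from pathlib import Path


def file_exists(path: str) -> bool:
    """Return True only if `path` points to an existing regular file."""
    return Path(path).is_file()


def lists_to_dict(keys: list, values: list) -> dict:
    """Pair keys with values by position; extra items in the longer list are dropped."""
    return dict(zip(keys, values))
```

Even for snippets this small, it is worth checking edge cases yourself, for example what should happen when the two lists have different lengths.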

7. For Code Review And Debugging: ChatGPT Can Review Your Code Snippet And Point Out Bugs.

Prompt Examples: -Here’s a C# code snippet. The function is supposed to return the maximum value from the given list, but it’s not returning the expected output. Can you identify the problem? [Enter your code here] -Can you help me debug this error message from my C# program: [error message] -Help me debug this Python script that processes a list of objects, and suggest possible fixes. [Enter your code here]

8. For Knowing The Coding Best Practices And Principles: It Is Very Important To Stay Up To Date With The Industry’s Best Practices In Coding. This Helps To Maintain The Codebase As The Organization Grows.

Prompt Examples: -What are some common mistakes to avoid when writing code? -What are the best practices for security testing? -Show me best practices for writing {concept or function} in {programming language}.

9. For Code Optimization: ChatGPT Can Help Optimize Code To Improve Its Readability And Performance.

Prompt Examples: -Optimize the following {programming language} code which {explain the functioning}: {code snippet} -Suggest improvements to optimize this C# function: [code snippet] -What are some strategies for reducing memory usage and optimizing data structures?

10. For Creating Boilerplate Code: ChatGPT Can Help In Boilerplate Code Generation.

Prompt Examples: -Create a basic Java Spring Boot application boilerplate code. -Create a basic Python class boilerplate code.

11. For Bug Fixes: ChatGPT Can Help Fix Bugs, Saving A Large Chunk Of Development Time And Increasing Productivity.

Prompt Examples: -How do I fix the following {programming language} code which {explain the functioning}? {code snippet} -Can you generate a bug report? -Find bugs in the following JavaScript code: (enter code)

12. For Code Refactoring: ChatGPT Can Refactor Code To Reduce Errors And Make It Easier To Modify In The Future.

Prompt Examples: -What are some techniques for refactoring code to improve code reuse and promote the use of design patterns? -I have duplicate code in my project. How can I refactor it to eliminate redundancy?

13. For Choosing Deployment Strategies: ChatGPT Can Suggest The Deployment Strategies Best Suited To A Particular Project To Ensure That It Runs Smoothly.

Prompt Examples: -What are the best deployment strategies for this software project? {explain the project} -What are the best practices for version control and release management?

14. For Creating Unit Tests: ChatGPT Can Write Test Cases For You.

Prompt Examples: -How does test-driven development help improve code quality? -What are some best practices for implementing test-driven development in a project?

These are some prompt examples that we sourced on the basis of the different requirements a developer can have. So whether you have to generate code or understand a concept, ChatGPT can really make a developer’s life easier by taking on a lot of tasks. However, it comes with its own set of challenges and cannot always be completely correct, so it is advisable to cross-check the responses. Hope this helps.

Visit us- Intelliatech

#ChatGPT prompts#Developers#Terminal commands#JavaScript console#API integration#SQL commands#Programming language interpreter#Regular expressions#Code debugging#Architectural diagrams#Performance optimization#Git merge conflicts#Prompt engineering#Code generation#Code refactoring#Debugging#Coding best practices#Code optimization#Code commenting#Boilerplate code#Software developers#Programming challenges#Software documentation#Workflow automation#SDLC (Software Development Lifecycle)#Project planning#Software requirements#Design patterns#Deployment strategies#Security testing

0 notes

Text

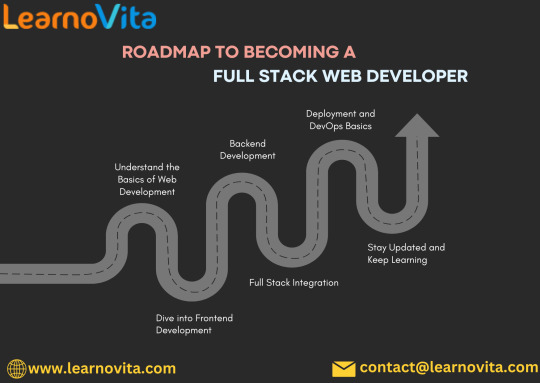

java full stack

A Java Full Stack Developer is proficient in both front-end and back-end development, using Java for server-side (backend) programming. Here's a comprehensive guide to becoming a Java Full Stack Developer:

1. Core Java

Fundamentals: Object-Oriented Programming, Data Types, Variables, Arrays, Operators, Control Statements.

Advanced Topics: Exception Handling, Collections Framework, Streams, Lambda Expressions, Multithreading.

2. Front-End Development

HTML: Structure of web pages, Semantic HTML.

CSS: Styling, Flexbox, Grid, Responsive Design.

JavaScript: ES6+, DOM Manipulation, Fetch API, Event Handling.

Frameworks/Libraries:

React: Components, State, Props, Hooks, Context API, Router.

Angular: Modules, Components, Services, Directives, Dependency Injection.

Vue.js: Directives, Components, Vue Router, Vuex for state management.

3. Back-End Development

Java Frameworks:

Spring: Core, Boot, MVC, Data JPA, Security, REST.

Hibernate: ORM (Object-Relational Mapping) framework.

Building REST APIs: Using Spring Boot to build scalable and maintainable REST APIs.

4. Database Management

SQL Databases: MySQL, PostgreSQL (CRUD operations, Joins, Indexing).

NoSQL Databases: MongoDB (CRUD operations, Aggregation).

5. Version Control/Git

Basic Git commands: clone, pull, push, commit, branch, merge.

Platforms: GitHub, GitLab, Bitbucket.

6. Build Tools

Maven: Dependency management, Project building.

Gradle: Advanced build tool with Groovy-based DSL.

7. Testing

Unit Testing: JUnit, Mockito.

Integration Testing: Using Spring Test.

8. DevOps (Optional but beneficial)

Containerization: Docker (Creating, managing containers).

CI/CD: Jenkins, GitHub Actions.

Cloud Services: AWS, Azure (Basics of deployment).

9. Soft Skills

Problem-Solving: Algorithms and Data Structures.

Communication: Working in teams, Agile/Scrum methodologies.

Project Management: Basic understanding of managing projects and tasks.

Learning Path

Start with Core Java: Master the basics before moving to advanced concepts.

Learn Front-End Basics: HTML, CSS, JavaScript.

Move to Frameworks: Choose one front-end framework (React/Angular/Vue.js).

Back-End Development: Dive into Spring and Hibernate.

Database Knowledge: Learn both SQL and NoSQL databases.

Version Control: Get comfortable with Git.

Testing and DevOps: Understand the basics of testing and deployment.

Resources

Books:

Effective Java by Joshua Bloch.

Java: The Complete Reference by Herbert Schildt.

Head First Java by Kathy Sierra & Bert Bates.

Online Courses:

Coursera, Udemy, Pluralsight (Java, Spring, React/Angular/Vue.js).

FreeCodeCamp, Codecademy (HTML, CSS, JavaScript).

Documentation:

Official documentation for Java, Spring, React, Angular, and Vue.js.

Community and Practice

GitHub: Explore open-source projects.

Stack Overflow: Participate in discussions and problem-solving.

Coding Challenges: LeetCode, HackerRank, CodeWars for practice.

By mastering these areas, you'll be well-equipped to handle the diverse responsibilities of a Java Full Stack Developer.

visit https://www.izeoninnovative.com/izeon/

2 notes

Link

SQL Merge Statement - EVIL or DEVIL:

Q01. What is the MERGE Statement in SQL?

Q02. Why do we need a separate MERGE statement if we already have INSERT, UPDATE, and DELETE commands?

Q03. What are the different databases that support the SQL MERGE Statement?

Q04. What are the different MERGE Statement Scenarios for DML?

Q05. What are the different supported MERGE Statement Clauses in MSSQL?

Q06. What will happen if more than one row matches the MERGE Statement?

Q07. What are the fundamental rules of SQL MERGE Statement?

Q08. What is the use of the TOP and OUTPUT clauses in the SQL Merge Statement?

Q09. How can you use the Merge Statement in MSSQL?

Q10. How does the SQL MERGE command work in MSSQL?

Q11. How can you write the query for the SQL Merge?

Q12. Is SQL MERGE Statement Safe to use?

Please visit the following link for the answers:

#crackjob#techpoint#techpointfunda#techpointfundamentals#sqlinterview#sqlmerge#evilofsqlmerge#devilofsqlmerge

4 notes

Text

Cross-Mapping Tableau Prep Workflows into Power Query: A Developer’s Blueprint

When migrating from Tableau to Power BI, one of the most technically nuanced challenges is translating Tableau Prep workflows into Power Query in Power BI. Both tools are built for data shaping and preparation, but they differ significantly in structure, functionality, and logic execution. For developers and BI engineers, mastering this cross-mapping process is essential to preserve the integrity of ETL pipelines during the migration. This blog offers a developer-centric blueprint to help you navigate this transition with clarity and precision.

Understanding the Core Differences

At a foundational level, Tableau Prep focuses on a flow-based, visual paradigm where data steps are connected in a linear or branching path. Power Query, meanwhile, operates in a functional, stepwise M code environment. While both support similar operations—joins, filters, aggregations, data type conversions—the implementation logic varies.

In Tableau Prep:

Actions are visual and sequential (Clean, Join, Output).

Operations are visually displayed in a flow pane.

Users rely heavily on drag-and-drop transformations.

In Power Query:

Transformations are recorded as a series of applied steps using the M language.

Logic is encapsulated within functional scripts.

The interface supports formula-based flexibility.

Step-by-Step Mapping Blueprint

Here’s how developers can strategically cross-map common Tableau Prep components into Power Query steps:

1. Data Input Sources

Tableau Prep: Uses connectors or extracts to pull from databases, Excel, or flat files.

Power Query Equivalent: Use “Get Data” with the appropriate connector (SQL Server, Excel, Web, etc.) and configure using the Navigator pane.

✅ Developer Tip: Ensure all parameters and credentials are migrated securely to avoid broken connections during refresh.

2. Cleaning and Shaping Data

Tableau Prep Actions: Rename fields, remove nulls, change types, etc.

Power Query Steps: Use commands like Table.RenameColumns, Table.SelectRows, and Table.TransformColumnTypes.

✅ Example: Tableau Prep’s “Change Data Type” ↪ Power Query:

Table.TransformColumnTypes(Source,{{"Date", type date}})

3. Joins and Unions

Tableau Prep: Visual Join nodes with configurations (Inner, Left, Right).

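Power Query: Use the Merge Queries feature, which generates Table.NestedJoin, or call Table.Join directly. For unions, use Append Queries, which generates Table.Combine.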

✅ Equivalent Code Snippet:

Table.NestedJoin(TableA, {"ID"}, TableB, {"ID"}, "NewColumn", JoinKind.Inner)
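For developers less familiar with M, the behaviour of a nested inner join can be sketched in Python. This is a simplified model rather than how Power Query is implemented: rows are plain dictionaries, the join uses a single key, and the function name `nested_join_inner` is hypothetical. The point is that each surviving left-hand row gains one new column holding its matching right-hand rows, which Power Query would then typically flatten with an expand step.

```python
def nested_join_inner(left, right, key, new_column):
    """Inner nested join: keep left rows that have matches, nesting the matches."""
    # Index right-hand rows by key so each lookup is a dictionary access.
    by_key = {}
    for row in right:
        by_key.setdefault(row[key], []).append(row)

    result = []
    for row in left:
        matches = by_key.get(row[key])
        if matches:  # inner join: left rows without a match are dropped
            result.append({**row, new_column: matches})
    return result


table_a = [{"ID": 1, "Name": "Ann"}, {"ID": 2, "Name": "Raj"}]
table_b = [{"ID": 1, "Sales": 120}, {"ID": 1, "Sales": 80}, {"ID": 3, "Sales": 50}]
joined = nested_join_inner(table_a, table_b, "ID", "NewColumn")
```

Here only the row with ID 1 survives, and its NewColumn holds both matching sales rows. That is the shape to compare against the output of a Tableau Prep inner join, which returns the already-flattened rows.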

4. Calculated Fields / Derived Columns

Tableau Prep: Create Calculated Fields using simple functions or logic.

Power Query: Use “Add Column” > “Custom Column” and M code logic.

✅ Tableau Formula Example: IF [Sales] > 100 THEN "High" ELSE "Low" ↪ Power Query:

if [Sales] > 100 then "High" else "Low"

5. Output to Destination

Tableau Prep: Output to .hyper, Tableau Server, or file.

Power BI: Load to Power BI Data Model or export via Power Query Editor to Excel or CSV.

✅ Developer Note: In Power BI, outputs are loaded to the model; no need for manual exports unless specified.

Best Practices for Developers

Modularize: Break complex Prep flows into multiple Power Query queries to enhance maintainability.

Comment Your Code: Use // to annotate M code for easier debugging and team collaboration.

Use Parameters: Replace hardcoded values with Power BI parameters to improve reusability.

Optimize for Performance: Apply filters early in Power Query to reduce data volume.

Final Thoughts

Migrating from Tableau Prep to Power Query isn’t just a copy-paste process—it requires thoughtful mapping and a clear understanding of both platforms’ paradigms. With this blueprint, developers can preserve logic, reduce data preparation errors, and ensure consistency across systems. Embrace this cross-mapping journey as an opportunity to streamline and modernize your BI workflows.

For more hands-on migration strategies, tools, and support, explore our insights at https://tableautopowerbimigration.com – powered by OfficeSolution.

0 notes

Text

🚀 Boost Your SQL Game with MERGE! Looking to streamline your database operations? The SQL MERGE statement is a powerful tool that lets you INSERT, UPDATE, or DELETE data in a single, efficient command. 💡 Whether you're syncing tables, managing data warehouses, or simplifying ETL processes — MERGE can save you time and reduce complexity.

📖 In our latest blog, we break down: 🔹 What SQL MERGE is 🔹 Real-world use cases 🔹 Syntax with clear examples 🔹 Best practices & common pitfalls

Don't just code harder — code smarter. 💻 👉 https://www.tutorialgateway.org/sql-merge-statement/
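To show the idea of one synchronising operation, here is a minimal Python sketch using the built-in sqlite3 module. SQLite has no MERGE statement, so the sketch emulates it inside a single transaction with INSERT ... ON CONFLICT DO UPDATE followed by a DELETE. The `target` and `source` tables and their columns are hypothetical. On a database that supports MERGE, all three branches would live in one statement.

```python
import sqlite3


def merge_products(conn: sqlite3.Connection) -> None:
    """Emulate MERGE: make `target` match `source` on the `id` key."""
    with conn:  # one transaction, so the sync is all-or-nothing
        # WHEN MATCHED -> UPDATE, WHEN NOT MATCHED -> INSERT
        # (WHERE true avoids a parsing ambiguity with ON CONFLICT after SELECT)
        conn.execute("""
            INSERT INTO target (id, name, price)
            SELECT id, name, price FROM source WHERE true
            ON CONFLICT(id) DO UPDATE SET
                name = excluded.name,
                price = excluded.price
        """)
        # WHEN NOT MATCHED BY SOURCE -> DELETE
        conn.execute("DELETE FROM target WHERE id NOT IN (SELECT id FROM source)")


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE target (id INTEGER PRIMARY KEY, name TEXT, price REAL);
    CREATE TABLE source (id INTEGER PRIMARY KEY, name TEXT, price REAL);
    INSERT INTO target VALUES (1, 'pen', 1.0), (2, 'ink', 5.0);
    INSERT INTO source VALUES (1, 'pen', 1.5), (3, 'pad', 2.0);
""")
merge_products(conn)
rows = conn.execute("SELECT id, name, price FROM target ORDER BY id").fetchall()
```

After the call, the pen row carries the updated price, the pad row has been inserted, and the ink row, which is absent from the source, has been deleted.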

0 notes

Text

Unlocking the Secrets to Full Stack Web Development

Full stack web development is a multifaceted discipline that combines creativity with technical skills. If you're eager to unlock the secrets of this dynamic field, this blog will guide you through the essential components, skills, and strategies to become a successful full stack developer.

For those looking to enhance their skills, Full Stack Developer Course in Bangalore programs offer comprehensive education and job placement assistance, making it easier to master these skills and advance your career.

What Does Full Stack Development Entail?

Full stack development involves both front-end and back-end technologies. Full stack developers are equipped to build entire web applications, managing everything from user interface design to server-side logic and database management.

Core Skills Every Full Stack Developer Should Master

1. Front-End Development

The front end is the part of the application that users interact with. Key skills include:

HTML: The markup language for structuring web content.

CSS: Responsible for the visual presentation and layout.

JavaScript: Adds interactivity and functionality to web pages.

Frameworks: Familiarize yourself with front-end frameworks like React, Angular, or Vue.js to speed up development.

2. Back-End Development

The back end handles the server-side logic and database interactions. Important skills include:

Server-Side Languages: Learn a server-side language or runtime such as JavaScript on Node.js, Python, Ruby, or Java.

Databases: Understand both SQL (MySQL, PostgreSQL) and NoSQL (MongoDB) databases.

APIs: Learn how to create and consume RESTful and GraphQL APIs for data exchange.

3. Version Control Systems

Version control is essential for managing code changes and collaborating with others. Git is the industry standard. Familiarize yourself with commands and workflows.

4. Deployment and Hosting

Knowing how to deploy applications is critical. Explore:

Cloud Platforms: Get to know services like AWS, Heroku, or DigitalOcean for hosting.

Containerization: Learn about Docker to streamline application deployment.

With the aid of Best Online Training & Placement programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn these skills and advance your career.

Strategies for Success

Step 1: Start with the Fundamentals

Begin your journey by mastering HTML, CSS, and JavaScript. Utilize online platforms like Codecademy, freeCodeCamp, or MDN Web Docs to build a strong foundation.

Step 2: Build Real Projects

Apply what you learn by creating real-world projects. Start simple with personal websites or small applications. This hands-on experience will solidify your understanding.

Step 3: Explore Front-End Frameworks

Once you’re comfortable with the basics, dive into a front-end framework like React or Angular. These tools will enhance your development speed and efficiency.

Step 4: Learn Back-End Technologies

Choose a back-end language and framework that interests you. Node.js with Express is a great choice for JavaScript fans. Build RESTful APIs and connect them to a database.

Step 5: Master Git and Version Control

Understand the ins and outs of Git. Practice branching, merging, and collaboration on platforms like GitHub to enhance your workflow.

Step 6: Dive into Advanced Topics

As you progress, explore advanced topics such as:

Authentication and Authorization: Learn how to secure your applications.

Performance Optimization: Improve the speed and efficiency of your applications.

Security Best Practices: Protect your applications from common vulnerabilities.

Building Your Portfolio

Create a professional portfolio that showcases your skills and projects. Include:

Project Descriptions: Highlight the technologies used and your role in each project.

Blog: Share your learning journey or insights on technical topics.

Resume: Keep it updated to reflect your skills and experiences.

Networking and Job Opportunities

Engage with the tech community through online forums, meetups, and networking events. Building connections can lead to job opportunities, mentorship, and collaborations.

Conclusion

Unlocking the secrets to full stack web development is a rewarding journey that requires commitment and continuous learning. By mastering the essential skills and following the strategies outlined in this blog, you’ll be well on your way to becoming a successful full stack developer. Embrace the challenges, keep coding, and enjoy the adventure.

0 notes

Text

How a Full Stack Developer Course Prepares You for Real-World Projects

The tech world is evolving rapidly—and so are the roles within it. One role that continues to grow in demand is that of a full-stack developer. These professionals are the backbone of modern web and software development. But what exactly does it take to become one? Enrolling in a full-stack developer course can be a game-changer, especially if you're someone who enjoys both the creative and logical sides of building digital solutions.

In this article, we'll explore the top 7 skills you’ll master in a full-stack developer course—skills that not only make you job-ready but also turn you into a valuable tech asset.

1. Front-End Development

Let’s face it: first impressions matter. The front-end is what users see and interact with. You’ll dive deep into the languages and frameworks that make websites beautiful and functional.

You’ll learn:

HTML5 and CSS3 for content and layout structuring.

JavaScript and DOM manipulation for interactivity.

Frameworks like React.js, Angular, or Vue.js for scalable user interfaces.

Responsive design using Bootstrap or Tailwind CSS.

You’ll go from building static web pages to creating dynamic, responsive user experiences that work across all devices.

2. Back-End Development

Once the front-end looks good, the back-end makes it work. You’ll learn to build and manage server-side applications that drive the logic, data, and security behind the interface.

Key skills include:

Server-side languages and runtimes like Node.js, Python (Django/Flask), or Java (Spring Boot).

Building RESTful APIs and handling HTTP requests.

Managing user authentication, data validation, and error handling.

This is where you start to appreciate how things work behind the scenes—from processing a login request to fetching product data from a database.

3. Database Management

Data is the lifeblood of any application. A full-stack developer must know how to store, retrieve, and manipulate data effectively.

Courses will teach you:

Working with SQL databases like MySQL or PostgreSQL.

Understanding NoSQL options like MongoDB.

Designing and optimising data models.

Writing CRUD operations and joining tables.

By mastering databases, you’ll be able to support both small applications and large-scale enterprise systems.
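The CRUD operations and table join mentioned above can be tried out without installing a database server. The following is a minimal sketch using Python's built-in sqlite3 module. The `customers` and `orders` tables and their contents are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total REAL NOT NULL
    );
""")

# Create
conn.execute("INSERT INTO customers (id, name) VALUES (?, ?)", (1, "Asha"))
conn.execute("INSERT INTO customers (id, name) VALUES (?, ?)", (2, "Ben"))
conn.execute("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (1, 40.0))
conn.execute("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (1, 60.0))

# Update
conn.execute("UPDATE customers SET name = ? WHERE id = ?", ("Benjamin", 2))

# Delete
conn.execute("DELETE FROM orders WHERE total < ?", (50.0,))

# Read, joining the two tables; LEFT JOIN keeps customers without orders.
rows = conn.execute("""
    SELECT c.name, COUNT(o.id), COALESCE(SUM(o.total), 0)
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id
    ORDER BY c.id
""").fetchall()
```

The `?` placeholders pass values separately from the SQL text, which is the usual way to avoid SQL injection in application code.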

4. Version Control with Git and GitHub

If you’ve ever made a change and broken your code (we’ve all been there!), version control will be your best friend. It helps you track and manage code changes efficiently.

You’ll learn:

Using Git commands to track, commit, and revert changes.

Collaborating on projects using GitHub.

Branching and merging strategies for team-based development.

These skills are not just useful—they’re essential in any collaborative coding environment.

5. Deployment and DevOps Basics

Building an app is only half the battle. Knowing how to deploy it is what makes your work accessible to the world.

Expect to cover:

Hosting apps using Heroku, Netlify, or Vercel.

Basics of CI/CD pipelines.

Cloud platforms like AWS, Google Cloud, or Azure.

Using Docker for containerisation.

Deployment transforms your local project into a living, breathing product on the internet.

6. Problem Solving and Debugging

This is the unspoken art of development. Debugging makes you patient, sharp, and detail-orientated. It’s the difference between a good developer and a great one.

You’ll master:

Using browser developer tools.

Analysing error logs and debugging back-end issues.

Writing clean, testable code.

Applying logical thinking to fix bugs and optimise performance.

These problem-solving skills become second nature with practice—and they’re highly valued in the real world.

7. Project Management and Soft Skills

A good full-stack developer isn’t just a coder—they’re a communicator and a team player. Most courses now incorporate soft skills and project-based learning to mimic real work environments.

Expect to develop:

Time management and task prioritisation.

Working in agile environments (Scrum, Kanban).

Collaboration skills through group projects.

Creating portfolio-ready applications with documentation.

By the end of your course, you won’t just have skills—you’ll have confidence and real-world project experience.

Why These Skills Matter

The top 7 skills you’ll master in a full-stack developer course are a balanced mix of hard and soft skills. Together, they prepare you for a versatile role in startups, tech giants, freelance work, or your own entrepreneurial ventures.

Here’s why they’re so powerful:

You can work on both front-end and back-end—making you highly employable.

You’ll gain independence and control over full product development.

You’ll be able to communicate better across departments—design, QA, DevOps, and business.

Conclusion

Choosing to become a full-stack developer is like signing up for a journey of continuous learning. The right course gives you structured learning, industry-relevant projects, and hands-on experience.

Whether you're switching careers, enhancing your skill set, or building your first startup, these top 7 skills you’ll master in a Full Stack Developer course will set you on the right path.

So—are you ready to become a tech all-rounder?

0 notes

Text

UNION and UNION ALL in ARSQL Language

Mastering UNION and UNION ALL in ARSQL Language for Data Combination

Hello, Redshift and ARSQL enthusiasts! In this post, we’re going to explore one of the most powerful features of SQL: combining datasets using UNION and UNION ALL in the ARSQL Language. These commands are essential when you want to merge results from multiple queries into a single, unified output.…
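The difference between the two operators is easiest to see on a small example. The sketch below uses Python's built-in sqlite3 module and assumes ARSQL follows the standard SQL semantics, where UNION removes duplicate rows and UNION ALL keeps them. The table names and addresses are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE online_customers (email TEXT);
    CREATE TABLE store_customers (email TEXT);
    INSERT INTO online_customers VALUES ('a@example.com'), ('b@example.com');
    INSERT INTO store_customers VALUES ('b@example.com'), ('c@example.com');
""")

query = (
    "SELECT email FROM online_customers "
    "{op} SELECT email FROM store_customers "
    "ORDER BY email"
)

# UNION de-duplicates the combined result; UNION ALL returns every row.
union_rows = [row[0] for row in conn.execute(query.format(op="UNION"))]
union_all_rows = [row[0] for row in conn.execute(query.format(op="UNION ALL"))]
```

The address that appears in both tables shows up once under UNION and twice under UNION ALL. Because UNION ALL skips the de-duplication step, it is generally the cheaper choice when duplicates are impossible or acceptable.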

0 notes

Text

SAS Tutorial for Researchers: Streamlining Your Data Analysis Process

Researchers often face the challenge of managing large datasets, performing complex statistical analyses, and interpreting results in meaningful ways. Fortunately, SAS programming offers a robust solution for handling these tasks. With its ability to manipulate, analyze, and visualize data, SAS is a valuable tool for researchers across various fields, including social sciences, healthcare, and business. In this SAS tutorial, we will explore how SAS programming can streamline the data analysis process for researchers, helping them turn raw data into meaningful insights.

1. Getting Started with SAS for Research

For researchers, SAS programming can seem intimidating at first. However, with the right guidance, it becomes an invaluable tool for data management and analysis. To get started, you need to:

Understand the SAS Environment: Familiarize yourself with the interface, where you'll be performing data steps, running procedures, and viewing output.

Learn Basic Syntax: SAS uses a straightforward syntax where each task is organized into steps (Data steps and Procedure steps). Learning how to structure these steps is the foundation of using SAS effectively.

2. Importing and Preparing Data

The first step in any analysis is preparing your data. SAS makes it easy to import data from a variety of sources, such as Excel files, CSVs, and SQL databases. The SAS tutorial for researchers focuses on helping you:

Import Data: Learn how to load data into SAS using commands like PROC IMPORT.

Clean Data: Clean your data by removing missing values, handling outliers, and transforming variables as needed.

Merge Datasets: Combine multiple datasets into one using SAS’s MERGE or SET statements.

Having clean, well-organized data is crucial for reliable results, and SAS simplifies this process with its powerful data manipulation features.

3. Conducting Statistical Analysis with SAS

Once your data is ready, the next step is performing statistical analysis. SAS offers a wide array of statistical procedures that researchers can use to analyze their data:

Descriptive Statistics: Calculate basic statistics like mean, median, standard deviation, and range to understand your dataset’s characteristics.

Inferential Statistics: Perform hypothesis tests such as t-tests and ANOVA, as well as regression analysis, to draw data-driven conclusions.

Multivariate Analysis: SAS also supports more advanced techniques, like factor analysis and cluster analysis, which are helpful for identifying patterns or grouping similar observations.

This powerful suite of statistical tools allows researchers to conduct deep, complex analyses without the need for specialized software.

4. Visualizing Results

Data visualization is an essential part of the research process. Communicating complex results clearly can help others understand your findings. SAS includes a variety of charting and graphing tools that can help you present your data effectively:

Graphs and Plots: Create bar charts, line graphs, histograms, scatter plots, and more.

Customized Output: Use SAS’s graphical procedures to format your visualizations to suit your presentation or publication needs.

These visualization tools allow researchers to present data in a way that’s both understandable and impactful.

5. Automating Research Workflows with SAS

Another benefit of SAS programming for researchers is the ability to automate repetitive tasks. Using SAS’s macro functionality, you can:

Create Reusable Code: Build macros for tasks you perform frequently, saving time and reducing the chance of errors.

Automate Reporting: Automate the process of generating reports, so you don’t have to manually create tables or charts for every analysis.

Automation makes it easier for researchers to focus on interpreting results and less on performing routine tasks.

Conclusion

The power of SAS programming lies in its ability to simplify complex data analysis tasks, making it a valuable tool for researchers. By learning the basics of SAS, researchers can easily import, clean, analyze, and visualize data while automating repetitive tasks. Whether you're analyzing survey data, clinical trial results, or experimental data, SAS has the tools and features you need to streamline your data analysis process. With the help of SAS tutorials, researchers can quickly master the platform and unlock the full potential of their data.

#sas tutorial#sas tutorial for beginners#sas programming tutorial#SAS Tutorial for Researchers#Youtube

0 notes

Text

Revolutionizing Data Wrangling with Ask On Data: The Future of AI-Driven Data Engineering

Data wrangling, the process of cleaning, transforming, and structuring raw data into a usable format, has always been a critical yet time-consuming task in data engineering. With the increasing complexity and volume of data, data wrangling tools have become indispensable in streamlining these processes. One tool that is revolutionizing the way data engineers approach this challenge is Ask On Data, an open-source, AI-powered, chat-based platform designed to simplify data wrangling for professionals across industries.

The Need for an Efficient Data Wrangling Tool

Data engineers often face a variety of challenges when working with large datasets. Raw data from different sources can be messy, incomplete, or inconsistent, requiring significant effort to clean and transform. Traditional data wrangling tools often involve complex coding and manual intervention, leading to long processing times and a higher risk of human error. With businesses relying more heavily on data-driven decisions, there's an increasing need for more efficient, automated, and user-friendly solutions.

Enter Ask On Data—a cutting-edge data wrangling tool that leverages the power of generative AI to make data cleaning, transformation, and integration seamless and faster than ever before. With Ask On Data, data engineers no longer need to manually write extensive code to prepare data for analysis. Instead, the platform uses AI-driven conversations to assist users in cleaning and transforming data, allowing for a more intuitive and efficient approach to data wrangling.

How Ask On Data Transforms Data Engineering

At its core, Ask On Data is designed to simplify the data wrangling process by using a chat-based interface, powered by advanced generative AI models. Here’s how the tool revolutionizes data engineering:

Intuitive Interface: Unlike traditional data wrangling tools that require specialized knowledge of coding languages like Python or SQL, Ask On Data allows users to interact with their data using natural language. Data engineers can ask questions, request data transformations, and specify the desired output, all through a simple chat interface. The AI understands these requests and performs the necessary actions, significantly reducing the learning curve for users.

Automated Data Cleaning: One of the most time-consuming aspects of data wrangling is identifying and fixing errors in raw data. Ask On Data leverages AI to automatically detect inconsistencies, missing values, and duplicates within datasets. The platform then offers suggestions or automatically applies the necessary transformations, drastically speeding up the data cleaning process.

Data Transformation: Ask On Data's AI is not just limited to data cleaning; it also assists in transforming and reshaping data according to the user's specifications. Whether it's aggregating data, pivoting tables, or merging multiple datasets, the tool can perform these tasks with a simple command. This not only saves time but also reduces the likelihood of errors that often arise during manual data manipulation.

Customizable Workflows: Every data project is different, and Ask On Data understands that. The platform allows users to define custom workflows, automating repetitive tasks, and ensuring consistency across different datasets. Data engineers can configure the tool to handle specific data requirements and transformations, making it an adaptable solution for a variety of data engineering challenges.

Seamless Collaboration: Ask On Data’s chat-based interface also fosters better collaboration between teams. Multiple users can interact with the tool simultaneously, sharing queries, suggestions, and results in real time. This collaborative approach enhances productivity and ensures that the team is always aligned in their data wrangling efforts.

Why Ask On Data is the Future of Data Engineering

The future of data engineering lies in automation and artificial intelligence, and Ask On Data is at the forefront of this revolution. By combining the power of generative AI with a user-friendly interface, it makes complex data wrangling tasks more accessible and efficient than ever before. As businesses continue to generate more data, the demand for tools like Ask On Data will only increase, enabling data engineers to spend less time wrangling data and more time analysing it.

Conclusion

Ask On Data is not just another data wrangling tool—it's a game-changer for data engineers. With its AI-powered features, natural language processing capabilities, and automation of repetitive tasks, Ask On Data is setting a new standard in data engineering. For organizations looking to harness the full potential of their data, Ask On Data is the key to unlocking faster, more accurate, and more efficient data wrangling processes.


Must-Have Skills for a Data Science Career at the Best Data Science Institute in Laxmi Nagar

Understanding Data Science and Its Growing Scope

Data science is transforming industries by enabling organizations to make informed decisions based on data-driven insights. From healthcare to finance, e-commerce to entertainment, every sector today relies on data science for better efficiency and profitability.

With the rise of artificial intelligence and machine learning, the demand for skilled data scientists is at an all-time high. According to reports from IBM and the World Economic Forum, data science is among the fastest-growing fields, with millions of new job openings expected in the coming years. Companies worldwide are looking for professionals who can analyze complex data and provide actionable solutions.

If you are planning to enter this dynamic field, choosing the best data science institute in Laxmi Nagar is crucial. Modulation Digital offers a structured and job-oriented program that ensures deep learning and hands-on experience. With 100% job assurance and real-world exposure through live projects in our in-house internship, students gain practical expertise that sets them apart in the job market.

5 Essential Skills Needed to Excel in Data Science

1. Mastering Programming Languages for Data Science

Programming is the backbone of data science. A strong command over programming languages like Python and R is essential, as they provide a wide range of libraries and frameworks tailored for data manipulation, analysis, and machine learning.

Key Aspects to Focus On:

Python: Used for data analysis, web scraping, and deep learning applications with libraries like NumPy, Pandas, Matplotlib, and Scikit-learn.

R: Preferred for statistical computing and visualization.

SQL: Essential for querying databases and handling structured data.

Version Control (Git): Helps track changes in code and collaborate effectively with teams.

At Modulation Digital, students receive intensive hands-on training in Python, R, and SQL, ensuring they are job-ready with practical knowledge and coding expertise.

2. Understanding Statistics and Mathematics

A strong foundation in statistics, probability, and linear algebra is crucial for analyzing patterns in data and developing predictive models. Many data science problems involve statistical analysis and mathematical computations to derive meaningful insights.

Core Mathematical Concepts:

Probability and Distributions: Understanding normal, binomial, and Poisson distributions helps in making statistical inferences.

Linear Algebra: Essential for working with vectors, matrices, and transformations in machine learning algorithms.

Calculus: Helps in optimizing machine learning models and understanding gradient descent.

Hypothesis Testing: Used to validate assumptions and make data-driven decisions.

Students at Modulation Digital get hands-on practice with statistical methods and problem-solving exercises, ensuring they understand the theoretical concepts and apply them effectively.

3. Data Wrangling and Preprocessing

Real-world data is often incomplete, inconsistent, and unstructured. Data wrangling refers to the process of cleaning and structuring raw data for effective analysis.

Key Techniques in Data Wrangling:

Handling Missing Data: Using imputation techniques like mean, median, or predictive modeling.

Data Normalization and Transformation: Ensuring consistency across datasets.

Feature Engineering: Creating new variables from existing data to improve model performance.

Data Integration: Merging multiple sources of data for a comprehensive analysis.

At Modulation Digital, students work on live datasets, learning how to clean, structure, and prepare data efficiently for analysis.
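Two of the techniques listed above, imputing missing values and normalizing a column, can be sketched in a few lines of standard-library Python. This is only a minimal illustration: the helper names `impute` and `min_max_scale` and the sample `ages` column are made up for this example, and real projects would more likely use Pandas or Scikit-learn.

```python
from statistics import mean, median


def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no spread, so map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical column with two missing entries.
ages = [20, None, 30, 40, None]
filled = impute(ages, "mean")    # missing entries become the observed mean
scaled = min_max_scale(filled)   # smallest value maps to 0.0, largest to 1.0
print(filled)
print(scaled)
```

Mean imputation is simple but shrinks the variance of the column, so the median or a model-based estimate may be a better fit when the data is skewed.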

4. Machine Learning and AI Integration

Machine learning enables computers to learn patterns and make predictions. Understanding supervised, unsupervised, and reinforcement learning is crucial for building intelligent systems.

Important Machine Learning Concepts:

Regression Analysis: Linear and logistic regression models for prediction.

Classification Algorithms: Decision trees, SVM, and random forests.

Neural Networks and Deep Learning: Understanding CNNs, RNNs, and GANs.

Natural Language Processing (NLP): Used for text analysis and chatbots.

At Modulation Digital, students get hands-on experience in building AI-driven applications with frameworks like TensorFlow and PyTorch, preparing them for industry demands.
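As a small illustration of the regression analysis mentioned above, the following sketch fits a straight line by ordinary least squares using only plain Python. The function name `fit_line` and the `hours`/`scores` data are invented for the example; frameworks such as Scikit-learn provide the same fit with far more features.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of co-deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data that lies exactly on the line y = 2x + 50.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]
slope, intercept = fit_line(hours, scores)
predicted = slope * 6 + intercept  # prediction for an unseen input
print(slope, intercept, predicted)
```

Because the sample points are perfectly linear, the fit recovers the generating line; with noisy data the same formulas give the line that minimizes the squared error.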

5. Data Visualization and Storytelling

Data visualization is essential for presenting insights in a clear and compelling manner. Effective storytelling through data helps businesses make better decisions.

Key Visualization Tools:

Tableau and Power BI: Business intelligence tools for interactive dashboards.

Matplotlib and Seaborn: Used in Python for statistical plotting.

D3.js: JavaScript library for creating dynamic data visualizations.

Dash and Streamlit: Tools for building web-based analytical applications.

At Modulation Digital, students learn how to create interactive dashboards and compelling data reports, ensuring they can communicate their findings effectively.

Support from Leading Organizations in Data Science

Global tech giants such as Google, Amazon, and IBM invest heavily in data science and continuously shape industry trends. Harvard Business Review has called data science the "sexiest job of the 21st century," highlighting its importance in today’s world.

Modulation Digital ensures that its curriculum aligns with these global trends. Additionally, our program prepares students for globally recognized certifications, increasing their credibility in the job market.

Why the Right Training Matters

A successful career in data science requires the right mix of technical knowledge, hands-on experience, and industry insights. Choosing the best data science institute in Laxmi Nagar ensures that you get a structured and effective learning environment.

Why Modulation Digital is the Best Choice for Learning Data Science

Selecting the right institute can define your career trajectory. Modulation Digital stands out as the best data science institute in Laxmi Nagar for several reasons:

1. Industry-Relevant Curriculum

Our program is designed in collaboration with industry experts and follows the latest advancements in data science, artificial intelligence, and machine learning.

2. Hands-on Learning with Live Projects

We believe in practical education. Students work on real-world projects during their in-house internship, which strengthens their problem-solving skills.

3. 100% Job Assurance

We provide placement support with top organizations, ensuring that every student gets a strong start in their career.

4. Expert Faculty and Mentorship

Data Science Trainer

Mr. Prem Kumar

Mr. Prem Kumar is a seasoned Data Scientist with over 6 years of professional experience in data analytics, machine learning, and artificial intelligence. With a strong academic foundation and practical expertise, he has mastered a variety of tools and technologies essential for data science, including Microsoft Excel, Python, SQL, Power BI, Tableau, and advanced AI concepts like machine learning and deep learning.

As a trainer, Mr. Prem is highly regarded for his engaging teaching style and his knack for simplifying complex data science concepts. He emphasizes hands-on learning, ensuring students gain practical experience to tackle real-world challenges confidently.

5. Certifications and Career Support

Get certified by Modulation Digital, along with guidance for global certifications from IBM, Coursera, and Harvard Online, making your resume stand out.

If you are ready to kickstart your data science career, enroll at Modulation Digital today and gain the skills that top companies demand!


Data Analytics Toolbox: Essential Skills to Master by 2025

As data continues to drive decision-making in every business, mastering data analytics becomes more important than ever for ambitious professionals. Students preparing to enter this dynamic sector must have a firm foundation in the necessary tools and abilities. Here, we describe the most important data analytics skills to learn in 2025, explain their significance, and provide a road map for building a versatile and relevant analytics toolkit.

1. Programming languages: Python and R

Python and R are the two most popular programming languages in data analytics, with each having distinct strengths and capabilities.

Python: The preferred language for data analysis, data manipulation, and machine learning, Python is well known for its readability, adaptability, and extensive library ecosystem. Libraries like Scikit-Learn for machine learning, NumPy for numerical calculations, and Pandas for data manipulation give analysts the tools they need to work effectively with big datasets.

R: Widely used in research and academia, R is used for data visualisation and statistical analysis. It is a strong choice for statistical analysis and for producing detailed, publication-ready visualizations thanks to its packages, like ggplot2 for visualization and dplyr for data processing.

Why It Matters: Students who are proficient in Python and R are able to manage a variety of analytical activities. While R's statistical capabilities can improve analysis, especially in professions that focus on research, Python is particularly useful for general-purpose data analytics.

2. Structured Query Language, or SQL

Data analysts can efficiently retrieve and manage data by using SQL, a fundamental ability for querying and maintaining relational databases.

SQL Fundamentals: Data analysts can manipulate data directly within databases by mastering the core SQL commands (SELECT, INSERT, UPDATE, and DELETE), which are necessary for retrieving and analyzing data contained in relational databases.

Advanced SQL Techniques: More complex tasks on structured data call for proficiency in JOIN operations (for merging tables), window functions, and subqueries.

Why It Matters: SQL is the main tool for retrieving and examining data kept in relational databases. Since most organizations store their data in SQL-based systems, analysts in nearly every data-focused position must be proficient in SQL.
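To make the JOIN and subquery ideas above concrete, here is a small sketch that runs SQL against an in-memory SQLite database through Python's built-in `sqlite3` module. The `customers` and `orders` tables and their contents are hypothetical and exist only for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 70.0), (3, 2, 20.0);
""")

# LEFT JOIN keeps customers without orders; COALESCE turns their NULL sum into 0.
totals = conn.execute("""
SELECT c.name, COALESCE(SUM(o.amount), 0) AS total
FROM customers AS c
LEFT JOIN orders AS o ON o.customer_id = c.id
GROUP BY c.id, c.name
ORDER BY total DESC
""").fetchall()

# Nested subqueries: customers with at least one order above the average amount.
above_avg = conn.execute("""
SELECT name FROM customers
WHERE id IN (SELECT customer_id FROM orders
             WHERE amount > (SELECT AVG(amount) FROM orders))
ORDER BY name
""").fetchall()

print(totals)
print(above_avg)
conn.close()
```

The same statements should carry over to other relational databases with little or no change, since they use only standard SELECT, JOIN, GROUP BY, and subquery syntax.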

3. Data Preparation and Cleaning

Cleaning, converting, and organizing data for analysis is known as "data wrangling," or data preparation, and it is an essential first step in the analytics process.

Managing Outliers and Missing Values: Accurate analysis relies on knowing how to handle outliers and missing values.

Data Transformation Techniques: By ensuring that data is in a format that machine learning algorithms can understand, abilities like normalization, standardization, and feature engineering serve to improve model accuracy.

Why It Matters: Analysts invest a lot of effort in cleaning and preparing data for any data analytics project. Accurate, reliable analysis depends on thorough data preparation.
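The outlier handling and standardization described above can be sketched with Python's `statistics` module. The 1.5 × IQR rule used here is one common convention rather than the only option, and the function names and the `readings` data are made up for illustration.

```python
from statistics import mean, pstdev, quantiles


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def standardize(values):
    """Convert values to z-scores (mean 0, population standard deviation 1)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


# Hypothetical sensor readings with one obviously bad entry.
readings = [10, 12, 11, 13, 12, 11, 95]
outliers = iqr_outliers(readings)
cleaned = [v for v in readings if v not in outliers]
z_scores = standardize(cleaned)
print(outliers)
print(z_scores)
```

Whether an outlier should be dropped, capped, or kept depends on the domain; the sketch only shows how to flag candidates.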

4. Visualization of Data

Data visualization turns complex datasets into clear, meaningful visuals, which supports both storytelling and decision-making.

Visualization Libraries: Analysts can produce informative, professional-quality charts, graphs, and interactive dashboards by learning to use tools like Matplotlib, Seaborn, Plotly (Python), and ggplot2 (R).

Data Storytelling: To communicate findings effectively, data analysts need to hone their storytelling abilities in addition to producing visuals. An effective analyst can build narratives from data that guide decision-makers.

Why It Matters: Insights can be effectively communicated through visualizations. By becoming proficient in data visualization, analysts may communicate findings to stakeholders in a way that is compelling, accessible, and actionable.

5. Fundamentals of Machine Learning

Data analysts are finding that machine learning (ML) abilities are becoming more and more useful, especially as companies seek predictive insights to gain a competitive edge.

Supervised and Unsupervised Learning: To examine and decipher patterns in data, analysts need to be familiar with the fundamentals of both supervised (such as regression and classification) and unsupervised (such as clustering and association) learning.

Well-known Machine Learning Libraries: Scikit-Learn (Python) and other libraries make basic ML models easily accessible, enabling analysts to create predictive models with ease.

Why It Matters: By offering deeper insights and predictive capabilities, machine learning can improve data analysis. This is especially important in industries where predicting trends is critical, such as marketing, e-commerce, finance, and healthcare.

6. Technologies for Big Data

As big data grows, businesses want analytics tools that can effectively manage enormous datasets. Knowledge of big data tools has become a highly sought-after skill.

Hadoop and Spark: Working with big data at scale is made easier for analysts who are familiar with frameworks like Apache Hadoop and Apache Spark.

NoSQL databases: An analyst's capacity to handle unstructured and semi-structured data is enhanced by knowledge of NoSQL databases such as MongoDB and Cassandra.

Why It Matters: Data volumes in many businesses exceed the capacity of conventional processing. Big data technologies give analysts the means to handle and examine enormous datasets and so meet industry expectations.

7. Probability and Statistics

Accurately evaluating the findings of data analysis and drawing reliable conclusions require a solid foundation in probability and statistics.

Important Ideas: By understanding probability distributions, confidence intervals, and hypothesis testing, analysts can apply statistical concepts to actual data.

Useful Applications: Variance analysis, statistical significance, and sampling techniques are essential for data-driven decision-making.

Why It Matters: Statistical skills let analysts assess the reliability of their data, recognise trends, and make well-informed predictions. Accurate and meaningful analysis is built on this knowledge.
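As one example of the ideas above, a confidence interval for a sample mean can be computed with the standard library alone. This sketch assumes a normal approximation, so for small samples a t-distribution would give a somewhat wider interval; the function name and the `sample` values are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the mean of a sample."""
    n = len(sample)
    centre = mean(sample)
    standard_error = stdev(sample) / sqrt(n)
    # Two-sided critical value, roughly 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return centre - z * standard_error, centre + z * standard_error


# Hypothetical measurements.
sample = [4.8, 5.1, 5.0, 4.9, 5.2, 5.0, 5.1, 4.9]
low, high = mean_confidence_interval(sample)
print(low, high)
```

A narrower interval signals a more precise estimate; collecting more observations or reducing measurement noise both shrink it.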

8. Communication and Critical Thinking Soft Skills

Technical proficiency alone is insufficient. Proficient critical thinking and communication capabilities distinguish outstanding analysts.

Communication Skills: To ensure that their insights are understood and useful, analysts must effectively communicate their findings to both technical and non-technical audiences.

Problem-Solving: Critical thinking allows analysts to approach problems methodically, assessing data objectively and providing insightful solutions.

Why It Matters: In the end, data analytics is about making smarter decisions possible. Effective data interpreters and communicators close the gap between data and action, greatly enhancing an organization's value.

Conclusion: Developing a Diverse Skill Set for Success in Data Analytics

Mastering data analytics takes dedication to both technical and soft skills. Students who master these skills, from core tools like SQL and visualization libraries to programming languages like Python and R, will be at the forefront of the field. With data-driven professions becoming more prevalent across industries, these abilities make up a potent toolkit that can lead to fulfilling jobs and worthwhile projects.

These fundamental domains provide a solid basis for students who want to succeed in data analytics in 2025. Although mastery may be a difficult journey, every new skill you acquire will help you become a more proficient, adaptable, and effective data analyst.

Are you prepared to begin your data analytics career? Enrol in the comprehensive data analytics courses that CACMS Institute offers in Amritsar. With flexible scheduling to fit a busy timetable and an industry-relevant curriculum, our hands-on training programs are designed to give you the tools you need to succeed.

In order to guarantee that you receive a well-rounded education that is suited for the demands of the modern workforce, our programs cover fundamental subjects including Python, R, SQL, Power BI, Tableau, Excel, Advanced Excel, and Data Analytics in Python.

Don't pass up this chance to improve your professional prospects! Please visit the link below or call +91 8288040281 for more information and to sign up for our data analytics courses right now!


PostgreSQL 17 New Features Now Available In Cloud SQL

PostgreSQL 17

PostgreSQL 17 release date

PostgreSQL 17, the latest version of what the project describes as the world's most advanced open source database, was released on 26 September 2024, according to the PostgreSQL Global Development Group.

Building on decades of open source development, PostgreSQL 17 enhances performance and scalability while adjusting to new patterns in data storage and access. Significant overall performance improvements are included in this PostgreSQL release, including improved query execution for indexes, storage access optimizations and enhancements for high concurrency workloads, a redesigned memory management implementation for vacuum, and speedups in bulk loading and exports. New workloads and essential systems alike can benefit from PostgreSQL 17’s features, which include improvements to the developer experience with the SQL/JSON JSON_TABLE command and logical replication changes that make managing high availability workloads and major version upgrades easier.

PostgreSQL 17 features

PostgreSQL 17’s latest features are now accessible via Cloud SQL.

Google Cloud is announcing that PostgreSQL 17 is now supported in Cloud SQL, with a ton of new features and beneficial improvements in five important areas:

Security

Developer experience

Performance

Tooling

Observability

We go into detail about these topics in this blog article, offering helpful advice and real-world examples to help you get the most out of PostgreSQL 17 on Cloud SQL.

Increased protection

pg_maintain role and MAINTAIN privilege

The MAINTAIN privilege, introduced in PostgreSQL 17, enables you to conduct maintenance operations on database objects, including VACUUM, ANALYZE, REINDEX, and CLUSTER, even if you are not the object’s owner. This gives you more precise control over database upkeep duties.

Additionally, PostgreSQL 17 adds a predefined role called pg_maintain that enables you to do maintenance actions on all relations without explicitly having MAINTAIN privileges on those objects.

Improvements to the developer experience

MERGE ... RETURNING

The MERGE command, available since PostgreSQL 15, enables programmers to carry out conditional updates, inserts, or deletions in a single SQL statement. PostgreSQL 17 adds support for a RETURNING clause, so the same statement can also report the rows it changed. By using fewer distinct queries, this not only makes data handling easier but can also improve performance.

Create a regular PostgreSQL table from JSON input

PostgreSQL 17’s JSON_TABLE function makes working with JSON data easier by introducing a more user-friendly method of converting it into a regular table format. For converting JSON documents into tabular form, JSON_TABLE provides a simpler and more standardized mechanism than previous approaches like json_to_recordset(), which can be difficult to use.

Enhancements in performance

Better vacuum memory structure

To store tuple IDs during VACUUM operations, PostgreSQL 17 provides TidStore, a new and more effective data structure. This greatly lowers the amount of memory used by replacing the earlier array-based method. By using this method, the 1GB memory consumption limit while vacuuming the table is also removed.

A few new columns have been added to the pg_stat_progress_vacuum system view to offer more information on the vacuum process, and the names of a few existing columns have been modified.

Enhanced I/O efficiency

Thanks to an improvement to the ReadBuffer API in PostgreSQL 17, multiple consecutive blocks can now be read from disk into shared buffers with a single system call.

Because it lowers the overhead associated with many separate read operations, this change is especially helpful for workloads that read numerous consecutive blocks. It also lets the ANALYZE process update planner statistics faster.

To manage your maximum I/O size for operations that combine I/O, PostgreSQL 17 also adds io_combine_limit. By default, 128kB is used.

Better handling of IS [NOT] NULL

Optimizations are introduced in PostgreSQL 17 to minimize the needless evaluation of IS NULL and IS NOT NULL clauses. By eliminating redundant tests, this modification improves query efficiency and speeds up operations, particularly in complex queries or when many conditions include NULL values.

For example, if the id column is declared NOT NULL, PostgreSQL can determine immediately that the condition “id IS NULL” will never be true, so it does not need to examine the table data at all. In the query plan, One-Time Filter: false indicates that no row can satisfy the condition, because every entry in the id column is guaranteed to be non-null.

Enhancements to the tooling

Better Verbosity Control and COPY Error Handling

With options like ON_ERROR and LOG_VERBOSITY, PostgreSQL 17 enhances the COPY command. During data imports, these options let you handle errors more gracefully and see which rows were skipped.

cat sample_data.csv
1,John,30
2,Mary,abc
3,Sam,25
4,Amy,35

Since age (an integer) is the third column in this case, “abc” is not a valid integer value.

Import the data with the command below:

postgres=> \COPY test_copy from sample_data.csv (ON_ERROR ignore, LOG_VERBOSITY verbose, format csv);
NOTICE: skipping row due to data type incompatibility at line 2 for column age: "abc"
NOTICE: 1 row was skipped due to data type incompatibility
COPY 3

Additionally, PostgreSQL 17 adds a new column to the pg_stat_progress_copy view called tuples_skipped. This field indicates how many tuples were skipped because they contained malformed data.

The --filter option in pg_dump, pg_dumpall, and pg_restore

PostgreSQL 17’s --filter option gives more precise control over which objects are included in or excluded from a dump or restore.

Using the --transaction-size option in pg_restore

With the addition of the --transaction-size option to the pg_restore command, you can commit after processing a set number of objects. This divides the restore operation into smaller, more manageable transactions.

Improved observability

pg_wait_events system view

Information about events that are making processes wait is available through the new pg_wait_events system view. This can help with finding performance bottlenecks and resolving database problems.

A basic query looks like this:

SELECT * FROM pg_wait_events LIMIT 5;

You can learn a lot about your PostgreSQL database’s performance and pinpoint areas for improvement by combining pg_wait_events with pg_stat_activity.

postgres=> select psa.datname, psa.usename, psa.state,
                  psa.wait_event_type, psa.wait_event, psa.query,
                  we.description
           from pg_stat_activity psa
           join pg_wait_events we
             on psa.wait_event_type = we.type
            and psa.wait_event = we.name;

This is an example of the output from the command above, with rows truncated and columns projected for relevance:

 wait_event_type | query                                     | description
-----------------+-------------------------------------------+--------------------------------------
 Client          | update pgbench_accounts set abalance=300; | Waiting to read data from the client
 IPC             | checkpoint;                               | Waiting for a checkpoint to start
 . . .

pg_stat_checkpointer system view

The pg_stat_checkpointer system view gives useful details about the activity and performance of the checkpoint process, including how often checkpoints occur, how much data is written during checkpoints, and how long it takes to finish checkpoints.

Run the following query to get insights on checkpointer activity:

SELECT * FROM pg_stat_checkpointer;

By returning a record with a variety of checkpoint process metrics, this query enables you to track and evaluate the effectiveness of checkpoints within the PostgreSQL instance.

In brief

In conclusion, Cloud SQL for PostgreSQL 17 offers notable improvements in tooling, observability, performance, security, and developer experience. The purpose of these improvements is to increase database management capabilities and optimize database operations. For a comprehensive list of new features and specific details, consult the official release notes.

To take advantage of these updates, we encourage you to try Cloud SQL for PostgreSQL 17 today.

Read more on Govindhtech.com


VeryUtils Excel Converter Command Line can Convert Excel files via command line

In today's data-driven world, efficiently managing and converting spreadsheet files across various formats is crucial for businesses and individuals alike. VeryUtils Excel Converter Command Line is an all-encompassing tool designed to handle this challenge with ease, offering robust functionality, speed, and a wide range of supported formats. Whether you need to convert Excel spreadsheets, CSV files, or OpenOffice documents, this powerful software ensures seamless and accurate conversions without the need for Microsoft Excel.

Comprehensive Format Support

VeryUtils Excel Converter Command Line is equipped to convert an extensive list of input formats including Excel (XLS, XLSX, XLSM, XLT, XLTM), OpenOffice (ODS), XML, SQL, WK2, WKS, WAB, DBF, TEX, and DIF. This versatility means you no longer need multiple converters for different file types. The output formats are equally impressive, ranging from DOC, DOCX, PDF, HTML, and TXT to ODT, ODS, XML, SQL, CSV, Lotus, DBF, TEX, DIFF, SYLK, and LaTeX.

Key Features and Benefits

Wide Range of Conversions

Excel to Multiple Formats: Convert Excel spreadsheets to PDF, HTML, TXT, DOC, and more.

CSV Conversion: Batch convert CSV files to DOC, PDF, HTML, TXT, XLS, DBF, and XML.

OpenOffice Compatibility: Easily convert ODS files to Microsoft XLS documents, ensuring compatibility across different software.

Preserves Document Layout

VeryUtils Excel Converter Command Line strictly maintains the layout of the original document, delivering an exact copy in the new format. This ensures that all tables, charts, and formatting remain intact after conversion.

Customization Options

The software includes a range of customization options:

Sheet Conversion: Convert each sheet into a separate file.

PDF User Permissions: Set permissions to protect PDF files from being modified or copied.

CSV to TXT: Choose encoding options during batch conversion.

Header and Column Formatting: Make headers bold and autofit columns when exporting CSV to XLS.

Performance and Efficiency

Fast Batch Conversion: Convert large volumes of files quickly with minimal effort.

Command Line Automation: Automate conversions using command line or COM/ActiveX interfaces, enhancing workflow efficiency.

File Management: Move or delete files after processing, skip already processed files, and specify sheets or ranges to convert.

Secure and Protected PDFs

When converting Excel to PDF, you can set user permissions, password-protect files, and even sign documents with a digital signature. The software supports PDF, PDF/A, and non-searchable PDFs upon request.

Easy Integration and Usage

VeryUtils Excel Converter Command Line is designed for ease of use and integration:

No GUI: The command line interface allows for seamless integration into other applications and automation scripts.

Developer License: With a Developer or Redistribution License, you can integrate this software into your own products and distribute it royalty-free.

Command Line Examples Here are some examples of how you can use the VeryUtils Excel Converter Command Line: ConvertExcel.exe --export-options "separator=; format=raw" sample.xlsx _out_sample-xlsx2txt.txt ConvertExcel.exe sample.xlsx _out_sample-xlsx2csv.csv ConvertExcel.exe sample.xlsx _out_sample-xlsx2xls.xls ConvertExcel.exe sample.xlsx _out_sample-xlsx2pdf.pdf ConvertExcel.exe _out_sample.csv _out_sample-csv2xls.xls ConvertExcel.exe _out_sample.csv _out_sample-csv2xlsx.xlsx ConvertExcel.exe sample.xlsx _out_sample-xlsx2html.html ConvertExcel.exe sample.xlsx _out_sample-xlsx2ods.ods ConvertExcel.exe --merge-to=_out_merged.xls _out_sample-xlsx2csv.csv sample.xlsx _out_sample-xlsx2xls.xls ConvertExcel.exe --export-options "paper=iso_a2_420x594mm" sample.xlsx _out_sample-xlsx2pdf-paper-size.pdf ConvertExcel.exe --export-file-per-sheet _out_merged.xls _out_files-per-sheet-%n-%s.csv

Conclusion

VeryUtils Excel Converter Command Line stands out as a comprehensive and efficient solution for all your spreadsheet conversion needs. With its extensive format support, robust performance, and user-friendly command line interface, it simplifies the process of managing and converting spreadsheet files. Whether you're a business looking to streamline data processing or an individual needing reliable file conversions, VeryUtils Excel Converter Command Line is the perfect tool for the job. Download it today and experience the convenience of having all your spreadsheet conversions handled by one powerful tool.

Top 7 Skills You’ll Master in a Full Stack Developer Course

The tech world is evolving rapidly—and so are the roles within it. One role that continues to grow in demand is that of a full-stack developer. These professionals are the backbone of modern web and software development. But what exactly does it take to become one? Enrolling in a full-stack developer course can be a game-changer, especially if you're someone who enjoys both the creative and logical sides of building digital solutions.

In this article, we'll explore the top 7 skills you’ll master in a full-stack developer course—skills that not only make you job-ready but also turn you into a valuable tech asset.

1. Front-End Development

Let’s face it: first impressions matter. The front-end is what users see and interact with. You’ll dive deep into the languages and frameworks that make websites beautiful and functional.

You’ll learn:

HTML5 and CSS3 for content and layout structuring.

JavaScript and DOM manipulation for interactivity.

Frameworks like React.js, Angular, or Vue.js for scalable user interfaces.

Responsive design using Bootstrap or Tailwind CSS.

You’ll go from building static web pages to creating dynamic, responsive user experiences that work across all devices.

2. Back-End Development

Once the front-end looks good, the back-end makes it work. You’ll learn to build and manage server-side applications that drive the logic, data, and security behind the interface.

Key skills include:

Server-side languages like Node.js, Python (Django/Flask), or Java (Spring Boot).

Building RESTful APIs and handling HTTP requests.

Managing user authentication, data validation, and error handling.

This is where you start to appreciate how things work behind the scenes—from processing a login request to fetching product data from a database.
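To make the login example concrete, here is a minimal sketch of the validation, authentication, and error-handling steps as a plain Python function, with no web framework involved. It assumes an in-memory user store and HTTP-style status codes; the names USERS, hash_password, and handle_login are hypothetical, and a real course project would typically wire similar logic into a framework route.

```python
import hashlib
import hmac
import os


def hash_password(password, salt):
    # PBKDF2 with SHA-256 from the standard library; iteration count is illustrative.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical in-memory user store standing in for a real database.
_SALT = os.urandom(16)
USERS = {"ada": {"salt": _SALT, "hash": hash_password("s3cret!", _SALT)}}


def handle_login(payload):
    """Return an (HTTP status, response body) pair for a login request."""
    # Data validation: both fields must be present and be non-empty strings.
    for field in ("username", "password"):
        value = payload.get(field)
        if not isinstance(value, str) or not value:
            return 400, {"error": "missing or invalid field: " + field}

    # Authentication: same error for unknown user and wrong password.
    user = USERS.get(payload["username"])
    if user is None:
        return 401, {"error": "invalid credentials"}
    candidate = hash_password(payload["password"], user["salt"])
    if not hmac.compare_digest(candidate, user["hash"]):
        return 401, {"error": "invalid credentials"}

    return 200, {"message": "welcome, " + payload["username"]}


print(handle_login({"username": "ada", "password": "s3cret!"}))
print(handle_login({"username": "ada"}))
```

Returning the same 401 message for an unknown user and a wrong password is a deliberate choice: it avoids revealing which usernames exist.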

3. Database Management

Data is the lifeblood of any application. A full-stack developer must know how to store, retrieve, and manipulate data effectively.

Courses will teach you:

Working with SQL databases like MySQL or PostgreSQL.

Understanding NoSQL options like MongoDB.

Designing and optimising data models.

Writing CRUD operations and joining tables.

By mastering databases, you’ll be able to support both small applications and large-scale enterprise systems.
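As a small illustration of CRUD operations and a join, the sketch below uses Python's built-in sqlite3 module with an in-memory database. The customers and orders tables and their columns are hypothetical, chosen only for the example; MySQL or PostgreSQL would use very similar SQL through their own drivers.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, "
    "customer_id INTEGER NOT NULL REFERENCES customers(id), "
    "total REAL NOT NULL)"
)

# Create: parameterised inserts keep values out of the SQL text.
conn.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace")],
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(1, 20.0), (1, 5.5), (2, 12.0)],
)

# Update: change one of Ada's order totals.
conn.execute(
    "UPDATE orders SET total = ? WHERE customer_id = ? AND total = ?",
    (6.0, 1, 5.5),
)

# Delete: remove all of Grace's orders.
conn.execute("DELETE FROM orders WHERE customer_id = ?", (2,))

# Read with a join: LEFT JOIN keeps customers that have no orders left.
rows = conn.execute(
    "SELECT c.name, COUNT(o.id), COALESCE(SUM(o.total), 0) "
    "FROM customers c LEFT JOIN orders o ON o.customer_id = c.id "
    "GROUP BY c.id, c.name ORDER BY c.id"
).fetchall()
print(rows)
```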

4. Version Control with Git and GitHub

If you’ve ever made a change and broken your code (we’ve all been there!), version control will be your best friend. It helps you track and manage code changes efficiently.

You’ll learn:

Using Git commands to track, commit, and revert changes.

Collaborating on projects using GitHub.

Branching and merging strategies for team-based development.

These skills are not just useful—they’re essential in any collaborative coding environment.

5. Deployment and DevOps Basics

Building an app is only half the battle. Knowing how to deploy it is what makes your work accessible to the world.

Expect to cover:

Hosting apps using Heroku, Netlify, or Vercel.

Basics of CI/CD pipelines.

Cloud platforms like AWS, Google Cloud, or Azure.

Using Docker for containerisation.

Deployment transforms your local project into a living, breathing product on the internet.

6. Problem Solving and Debugging

This is the unspoken art of development. Debugging makes you patient, sharp, and detail-orientated. It’s the difference between a good developer and a great one.

You’ll master:

Using browser developer tools.

Analysing error logs and debugging back-end issues.

Writing clean, testable code.

Applying logical thinking to fix bugs and optimise performance.

These problem-solving skills become second nature with practice—and they’re highly valued in the real world.

7. Project Management and Soft Skills

A good full-stack developer isn’t just a coder—they’re a communicator and a team player. Most courses now incorporate soft skills and project-based learning to mimic real work environments.

Expect to develop:

Time management and task prioritisation.

Working in agile environments (Scrum, Kanban).

Collaboration skills through group projects.

Creating portfolio-ready applications with documentation.

By the end of your course, you won’t just have skills—you’ll have confidence and real-world project experience.

Why These Skills Matter

These seven skills are a balanced mix of hard and soft skills. Together, they prepare you for a versatile role in startups, tech giants, freelance work, or your own entrepreneurial ventures.

Here’s why they’re so powerful:

You can work on both front-end and back-end—making you highly employable.

You’ll gain independence and control over full product development.

You’ll be able to communicate better across departments—design, QA, DevOps, and business.

Conclusion

Choosing to become a full-stack developer is like signing up for a journey of continuous learning. The right course gives you structured learning, industry-relevant projects, and hands-on experience.

Whether you're switching careers, enhancing your skill set, or building your first startup, these seven skills will set you on the right path.

So—are you ready to become a tech all-rounder?

Merging Data (MERGE) in T-SQL Programming Language

MERGE Statement in T-SQL: A Complete Guide to Merging Data in SQL Server

Hello, SQL enthusiasts! In this blog post, I will introduce you to one of the most powerful and versatile commands in T-SQL: the MERGE statement. The MERGE statement allows you to perform INSERT, UPDATE, and DELETE operations in a single statement, making it an efficient tool for synchronizing data…
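To make the combined INSERT, UPDATE, and DELETE behaviour concrete, here is a minimal Python sketch of the synchronization semantics. It is not T-SQL and not how SQL Server implements MERGE; it assumes rows are dictionaries keyed by a primary key and that target rows missing from the source should be deleted. The function name merge_rows is hypothetical. The three branches loosely correspond to the WHEN NOT MATCHED BY TARGET, WHEN MATCHED, and WHEN NOT MATCHED BY SOURCE clauses of a MERGE statement.

```python
def merge_rows(target, source):
    """Synchronise target with source in place; return a count per action."""
    counts = {"inserted": 0, "updated": 0, "deleted": 0}

    for key, row in source.items():
        if key not in target:
            # Not matched by target: insert the source row.
            target[key] = dict(row)
            counts["inserted"] += 1
        elif target[key] != row:
            # Matched but different: update the target row.
            target[key] = dict(row)
            counts["updated"] += 1

    for key in list(target):
        if key not in source:
            # Not matched by source: delete the target row.
            del target[key]
            counts["deleted"] += 1

    return counts


target = {1: {"name": "a"}, 2: {"name": "b"}, 3: {"name": "c"}}
source = {1: {"name": "a"}, 2: {"name": "B"}, 4: {"name": "d"}}
result = merge_rows(target, source)
print(result)
print(target)
```

After the call, target holds the same rows as source, which is the synchronization outcome the post describes.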
